autograd

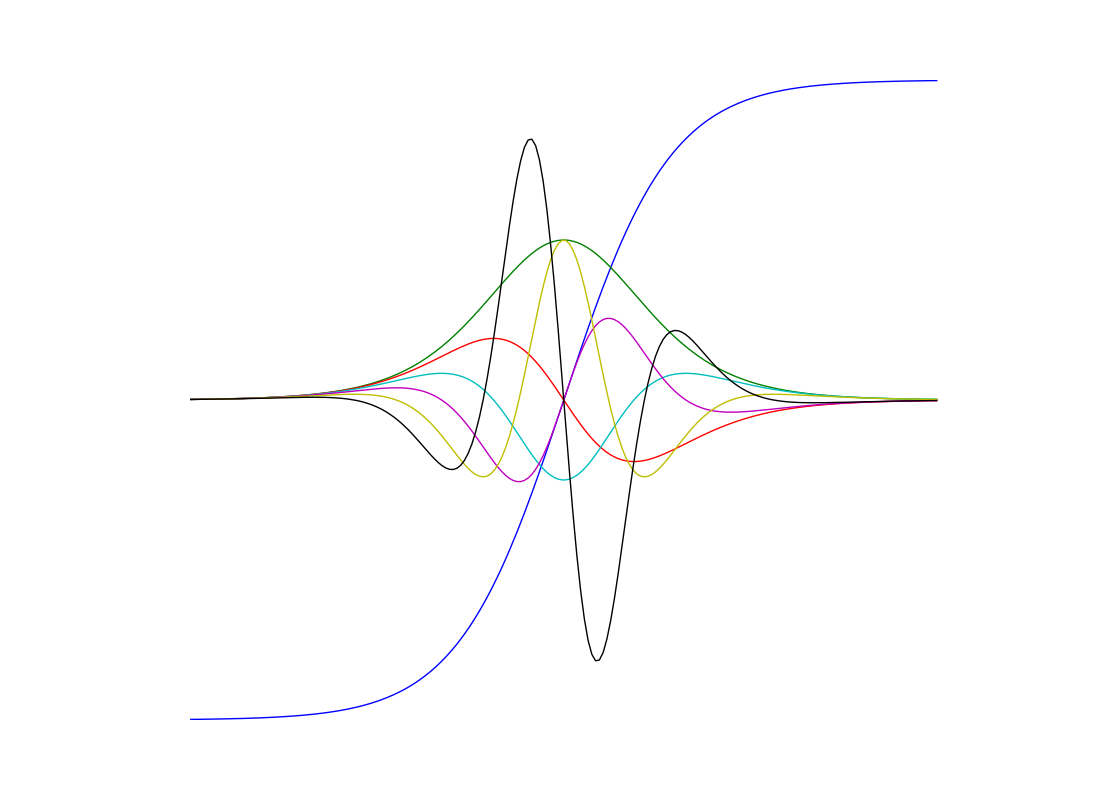

Have you ever wanted to use automatic differentiation but your code is already written in numpy? Or just don’t want to learn another library? Today we are showcasing HIPS/autograd, which will allow you to do just that. That’s right, just write a function in numpy, and get all of its gradients for free.

We encourage you to contribute to the research software encyclopedia and annotate the repository:

- What is Autograd?

- How do I cite it?

- How do I contribute to the software survey?

- How do I get started?

- Where can I learn more?

What is Autograd?

What do you mean get gradients for free?

Autograd provides a thin wrapper around numpy. You just import it instead of the usual numpy, and you don’t have to change any of your actual numpy code. From their README:

>>> import autograd.numpy as np # Thinly-wrapped numpy

>>> from autograd import grad # The only autograd function you may ever need

That’s it! We are now ready to differentiate functions.

>>> f = lambda x: np.sin(x*2) / x

>>> grad(f)(1.0)

-1.7415910999199666

You can apply it as many times as you want.

But can I …

Autograd has a rich set of features. It supports multi-variable differentiation (so it can be used for backpropagation), and even control flow operations including branches, recursion, loops, and closures. Here are some examples I tried:

Control flow:

>>> def f(x):

...     if x < 0.0:

...         return f(-x)

...     while x > 10:

...         x /= 10

...     return np.power(x, 1.5)

...

>>> grad(f)(-10003.0)

-0.00015002249831275308

Jacobian:

>>> from autograd import jacobian

>>> f = lambda x: np.array(

... [2 * x[0]**2 + 3 * x[1], np.cos(x[0]) + np.sin(x[1])])

>>> jacobian(f)(np.array([2.0, 3.0]))

array([[ 8.        ,  3.        ],

       [-0.90929743, -0.9899925 ]])

Complex-valued function of multiple variables:

>>> from autograd import holomorphic_grad

>>> f = lambda x: x[0]*2 + x[1]

>>> holomorphic_grad(f)(np.array([6.0j, 1.0j]))

array([2.+0.j, 1.+0.j])

But does it scale?!

That’s the million-dollar question these days. Luckily for us, the vision of Autograd is being carried on in the high-performance context with JAX, which utilizes XLA (Accelerated Linear Algebra), originally developed for TensorFlow. Although this is still a young library, it promises a lot by combining the best of both worlds.
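To give a feel for how close the two libraries are, here is a rough sketch of the first example rewritten for JAX, assuming jax is installed. The grad call looks the same, and jit asks XLA to compile the derivative. Note that JAX defaults to 32-bit floats, so the result matches the Autograd value only to single precision.

```python
import jax.numpy as jnp  # JAX's numpy-like API
from jax import grad, jit


def f(x):
    return jnp.sin(x * 2) / x


# Differentiate, then compile the derivative with XLA.
df = jit(grad(f))

print(df(1.0))  # close to the Autograd result, up to float32 precision
```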

How do I cite it?

You can cite autograd as follows, and a pdf of the paper is available here:

@inproceedings{maclaurin2015autograd,

title={Autograd: Effortless gradients in numpy},

author={Maclaurin, Dougal and Duvenaud, David and Adams, Ryan P},

booktitle={ICML 2015 AutoML Workshop},

volume={238},

pages={5},

year={2015}

}

How do I get started?

- Autograd Documentation has a nice tutorial on implementing logistic regression from start to finish, and lots of other useful information.

How do I contribute to the software survey?

You can read more about annotation here. You can clone the software repository to do bulk annotation, or annotate any repository in the software database. We want annotation to be fun, straightforward, and easy, so we will be showcasing one repository to annotate per week. If you’d like to request annotation of a particular repository (or addition to the software database), please don’t hesitate to open an issue or even a pull request.

Where can I learn more?

You might find these other resources useful:

- The Research Software Database on GitHub

- RSEpedia Documentation

- Google Docs Manuscript you are invited to contribute to.

- Annotation Documentation for RSEpedia

- Annotation Tutorial in RSEng docs

For any resource, you are encouraged to give feedback and contribute!
