ann-benchmarks

Today’s post is all about benchmarking. We’ll focus on erikbern/ann-benchmarks, which is project used to evaluate approximate nearest neighbor searches (ANN).

What is ANN? In many applications we want to solve the nearest neighbor problem – given some point in the dataset, what are other points closest to it? For example, if you are listening to one song in a dataset and want recommendations for similar songs, you might want to look at its nearest neighbors! People have found ways to speed up nearest neighbor searches using approximation methods, which are often good enough. Hence, ‘Approximate Nearest Neighbors’!

Do you have an opinion? We encourage you to contribute to the research software encyclopedia and annotate the respository:

otherwise, keep reading!

- What is ann-benchmarks?

- How do I cite it?

- How do I get started?

- How do I contribute to the software survey

- Where can I learn more?

What is ann-benchmarks?

Benchmarking approximate algorithms has unique difficulties. Usually when benchmarking a deterministic algorithm, we might only care about how long it takes on particular hardware. Or if we’re doing data science, we don’t care much about how long it takes – only on some statistical measures instead! But for ANN we need a combination of these two points of view.

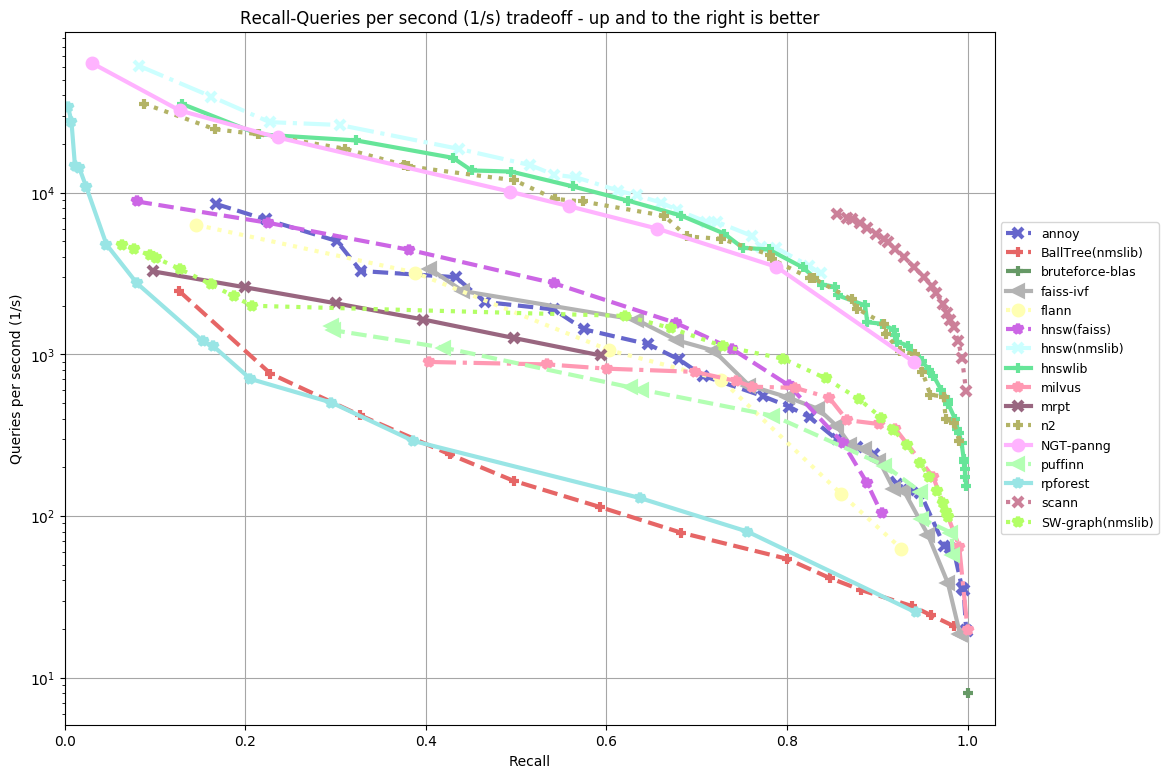

ann-benchmarks invents a way to combine these two points of view: It plots queries per second (roughly speed) versus recall (roughly how many of the nearest neighbors did we get). This will vary depending on hardware, so a number of leading algorithms have been placed in Docker containers, along with a handful of representative data sets (different algorithms can perform differently depending on features of the data set, like its dimension).

The only dependencies are Python 3.6 and Docker. Just clone and install the package. Running it is easy (although running all algorithms on all data sets can take days!). You can configure a YAML file to specify datasets. An example invocation:

python run.py --dataset glove-100-angular

In this case, the script would run all algorithms on the ‘glove-100-angular’ dataset.

Why is it useful?

This repo is very nice for those interested in benchmarking ANN on their data with their own hardware given how easy it is to use. It also represents best practices in reproducibility by running each algorithm in a container. I hope we will see this trend continue for benchmarking other algorithms (especially approximate algorithms that have unique challenges)!

Also check out ann-benchmarks.com for an extensive list of results across the various algorithms and datasets used.

How do I cite it?

There is an accompanying paper paper, which you can cite using:

@inproceedings{aumuller2017ann,

title={ANN-benchmarks: A benchmarking tool for approximate nearest neighbor algorithms},

author={Aum{\"u}ller, Martin and Bernhardsson, Erik and Faithfull, Alexander},

booktitle={International Conference on Similarity Search and Applications},

pages={34--49},

year={2017},

organization={Springer}

}

How do I get started?

- README Documentation is always a good place to start.

- ann-benchmarks.com is the main site.

How do I contribute to the software survey?

or read more about annotation here. You can clone the software repository to do bulk annotation, or annotation any repository in the software database, We want annotation to be fun, straight-forward, and easy, so we will be showcasing one repository to annotate per week. If you’d like to request annotation of a particular repository (or addition to the software database) please don’t hesitate to open an issue or even a pull request.

Where can I learn more?

You might find these other resources useful:

- The Research Software Database on GitHub

- RSEpedia Documentation

- Google Docs Manuscript you are invited to contribute to.

- Annotation Documentation for RSEpedia

- Annotation Tutorial in RSEng docs

For any resource, you are encouraged to give feedback and contribute!

Recent Posts

- Posted on 21 Mar 2021

- Posted on 07 Mar 2021

- Posted on 21 Feb 2021

- Posted on 21 Feb 2021